Testing LR schedule

import torch

import matplotlib.pyplot as plt

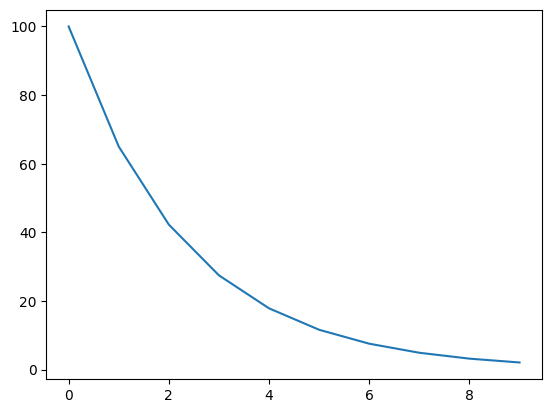

Lambda LR

Sets the learning rate of each parameter group to the initial lr times a given function of the epoch. When last_epoch=-1, the initial lr is used as lr.

model = torch.nn.Linear(2, 1)

optimizer = torch.optim.SGD(model.parameters(), lr=100)

lambda1 = lambda epoch: 0.65 ** epoch

scheduler = torch.optim.lr_scheduler.LambdaLR(optimizer, lr_lambda=lambda1)

lrs = []

for i in range(10):
    optimizer.step()
    # Record the learning rate in effect for this epoch.
    lrs.append(optimizer.param_groups[0]["lr"])
    # print("Factor = ", round(0.65 ** i, 3), ", Learning Rate = ", round(optimizer.param_groups[0]["lr"], 3))
    # Advance the schedule to the next epoch.
    scheduler.step()

plt.plot(range(10),lrs)

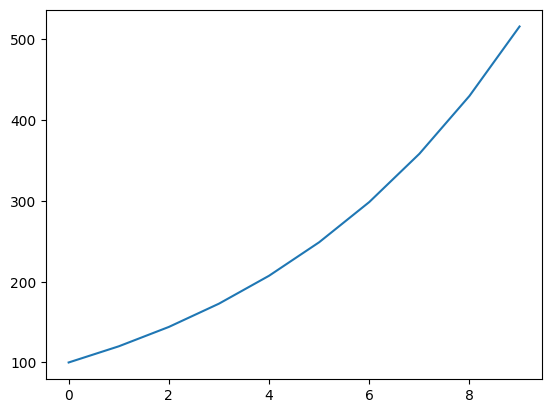
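
The LambdaLR rule described in this section amounts to lr(epoch) = initial_lr * lr_lambda(epoch). As a cross-check on the plotted curve, the minimal sketch below recomputes the expected values in plain Python without calling PyTorch. `expected_lambda_lr` is a hypothetical helper name introduced here for illustration; it is not part of `torch`.

```python
def expected_lambda_lr(initial_lr, lr_lambda, num_epochs):
    """Closed form of the LambdaLR rule: initial_lr * lr_lambda(epoch)."""
    return [initial_lr * lr_lambda(epoch) for epoch in range(num_epochs)]


# Same settings as the cell in this section: lr=100 and a factor of 0.65 ** epoch.
expected = expected_lambda_lr(100, lambda epoch: 0.65 ** epoch, 10)
print([round(lr, 3) for lr in expected[:3]])  # [100.0, 65.0, 42.25]
```

If the recorded `lrs` list from the scheduler loop is still in scope, comparing it against `expected` element by element (with a small tolerance) is one way to confirm the schedule behaves as intended.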

Exponential LR

model = torch.nn.Linear(2, 1)

optimizer = torch.optim.SGD(model.parameters(), lr=100)

scheduler = torch.optim.lr_scheduler.ExponentialLR(optimizer, gamma=1.2)

lrs = []

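for i in range(10):
    optimizer.step()
    # Record the learning rate in effect for this epoch.
    lrs.append(optimizer.param_groups[0]["lr"])
    # print("Factor = ", round(1.2 ** i, 3), ", Learning Rate = ", round(optimizer.param_groups[0]["lr"], 3))
    # Multiply the learning rate by gamma for the next epoch.
    scheduler.step()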

plt.plot(lrs)

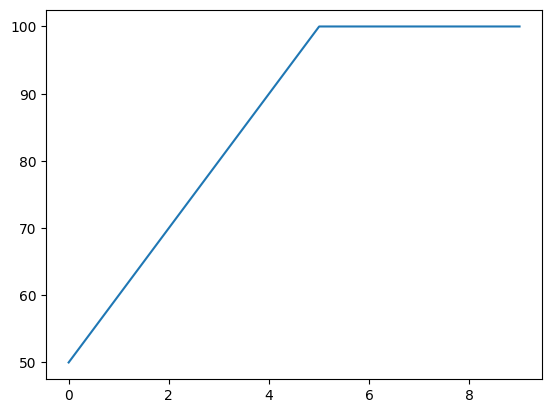
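
ExponentialLR multiplies the learning rate of each parameter group by gamma at every scheduler step, so after t steps the rate is initial_lr * gamma ** t. With gamma=1.2, as in the cell in this section, the curve rises instead of decaying; a gamma below 1 gives the usual decay. The sketch below shows both cases in plain Python. `expected_exponential_lr` is a hypothetical helper, and the value 0.9 is chosen only for illustration. PyTorch applies the factor step by step, so its values may differ from this closed form by floating-point rounding.

```python
def expected_exponential_lr(initial_lr, gamma, num_epochs):
    """Closed form of the exponential rule: initial_lr * gamma ** epoch."""
    return [initial_lr * gamma ** epoch for epoch in range(num_epochs)]


# gamma > 1 (the setting used in this section): the learning rate grows.
growing = expected_exponential_lr(100, 1.2, 10)
# gamma < 1 (illustrative value, not from the original cell): the rate decays.
decaying = expected_exponential_lr(100, 0.9, 10)

print([round(lr, 3) for lr in growing[:3]])   # [100.0, 120.0, 144.0]
print([round(lr, 3) for lr in decaying[:3]])  # [100.0, 90.0, 81.0]
```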

LinearLR

model = torch.nn.Linear(2, 1)

optimizer = torch.optim.SGD(model.parameters(), lr=100)

scheduler = torch.optim.lr_scheduler.LinearLR(optimizer, start_factor=0.5, total_iters=5)

lrs = []

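for i in range(10):
    optimizer.step()
    # Record the learning rate in effect for this epoch.
    lrs.append(optimizer.param_groups[0]["lr"])
    # print("Learning Rate = ", round(optimizer.param_groups[0]["lr"], 3))
    # Move the scaling factor one step from start_factor towards its end value.
    scheduler.step()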

plt.plot(lrs)
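
LinearLR scales the initial learning rate by a factor that moves linearly from start_factor to end_factor over total_iters scheduler steps and then stays constant. The cell in this section does not set end_factor, so the sketch below assumes PyTorch's documented default of 1.0. `expected_linear_lr` is a hypothetical helper written in plain Python, not a `torch` function.

```python
def expected_linear_lr(initial_lr, start_factor, total_iters, num_epochs, end_factor=1.0):
    """Closed form of the linear rule, assuming end_factor defaults to 1.0."""
    lrs = []
    for epoch in range(num_epochs):
        # Fraction of the ramp completed; capped at 1 once total_iters is reached.
        progress = min(epoch, total_iters) / total_iters
        factor = start_factor + (end_factor - start_factor) * progress
        lrs.append(initial_lr * factor)
    return lrs


# Same settings as the cell in this section: lr=100, start_factor=0.5, total_iters=5.
expected = expected_linear_lr(100, start_factor=0.5, total_iters=5, num_epochs=10)
print([round(lr, 3) for lr in expected])
# [50.0, 60.0, 70.0, 80.0, 90.0, 100.0, 100.0, 100.0, 100.0, 100.0]
```

Under these assumptions the rate ramps from 50 to 100 over the first five steps and is flat afterwards, which is the shape to look for in the plot.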